Welcome!

Come and check out my Machine Learning and Analytics portfolio

View from Isle of Skye, Scotland. Photo: Ozan Aygun

Machine Learning and Analytics Portfolio

It's time for spring cleaning and I have been compiling some of my portfolio in Machine Learning and Analytics.

I wrote up some of my work, which you may find useful if you have little experience and are learning how to approach a data analysis task.

Along with the reproducible code examples, I explain my 4-step iterative approach to machine learning and predictive analytics:

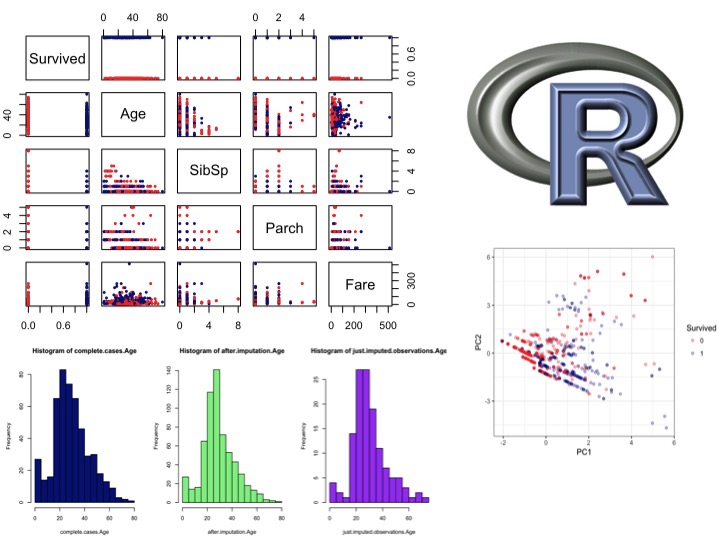

1. Data cleaning and Exploratory data analysis:

Always develop expectations from the data before starting. This step includes data cleaning, Natural Language Processing (NLP) for text features, fitting simple regression models for missing value imputation, scaling and transformations, dimension reduction and feature engineering.

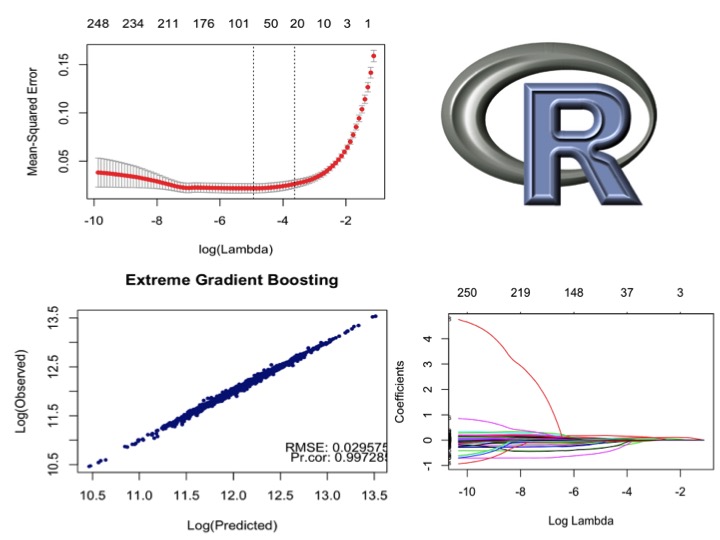

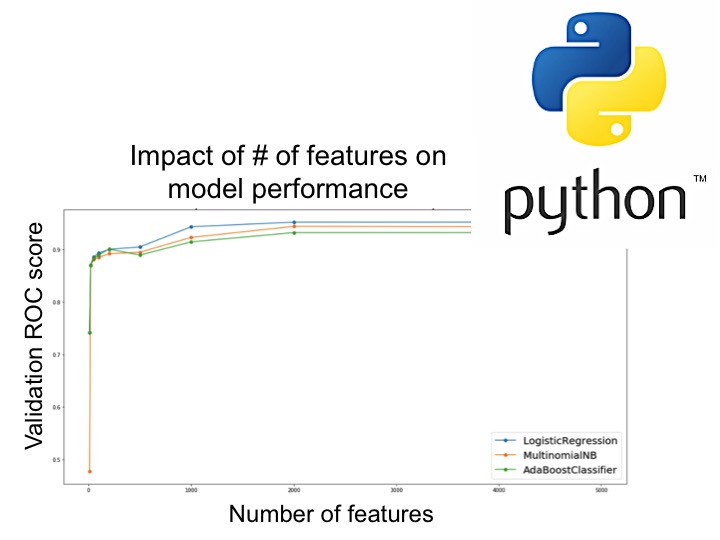
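As a rough illustration of this first step, here is a minimal Python sketch that chains regression-based imputation, scaling and dimension reduction. It is not the code from the portfolio: it assumes scikit-learn and NumPy are installed, the data are synthetic, and names such as `X_missing` and `preprocess` are hypothetical. `IterativeImputer` is still marked experimental in scikit-learn, which is why it needs the extra import.

```python
import numpy as np
from sklearn.experimental import enable_iterative_imputer  # noqa: F401 (enables IterativeImputer)
from sklearn.impute import IterativeImputer
from sklearn.preprocessing import StandardScaler
from sklearn.decomposition import PCA
from sklearn.pipeline import make_pipeline

rng = np.random.default_rng(0)

# Synthetic data (an assumption for illustration): 200 rows, 5 correlated features.
latent = rng.normal(size=(200, 2))
X = latent @ rng.normal(size=(2, 5)) + 0.1 * rng.normal(size=(200, 5))

# Knock out roughly 10% of the values to simulate missing data.
mask = rng.random(X.shape) < 0.10
X_missing = X.copy()
X_missing[mask] = np.nan

preprocess = make_pipeline(
    IterativeImputer(random_state=0),  # models each feature from the others
    StandardScaler(),                  # zero mean, unit variance per feature
    PCA(n_components=2),               # keep the first two principal components
)
X_reduced = preprocess.fit_transform(X_missing)

print(X_reduced.shape)
print(np.isnan(X_reduced).any())
```

Wrapping the steps in one pipeline object means the same imputation, scaling and projection can later be applied to new data with `preprocess.transform`.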

2. Always start linear: start by fitting linear models to the 'clean' data, including regularized models like Ridge, Lasso and Elastic Net. My aim is to build an intuition about how these linear approaches perform across different problems and data sets, whether the question is classification or regression. Understanding how much signal we can extract from a noise basket using linear models is essential, because linear models offer the best interpretability.

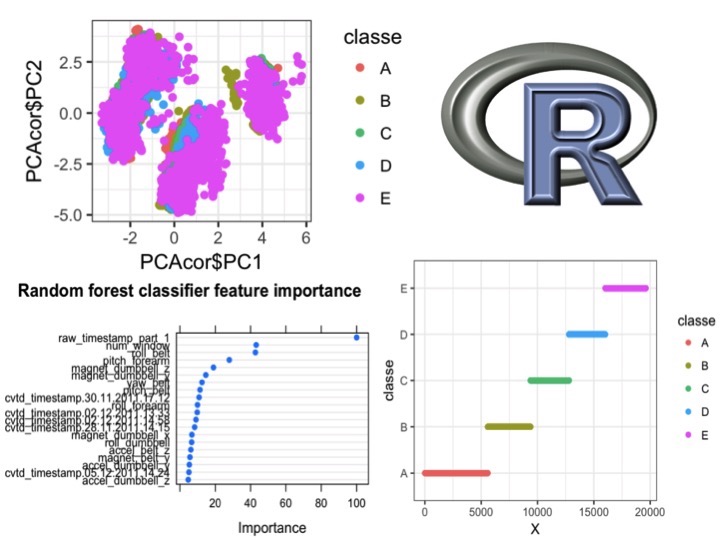

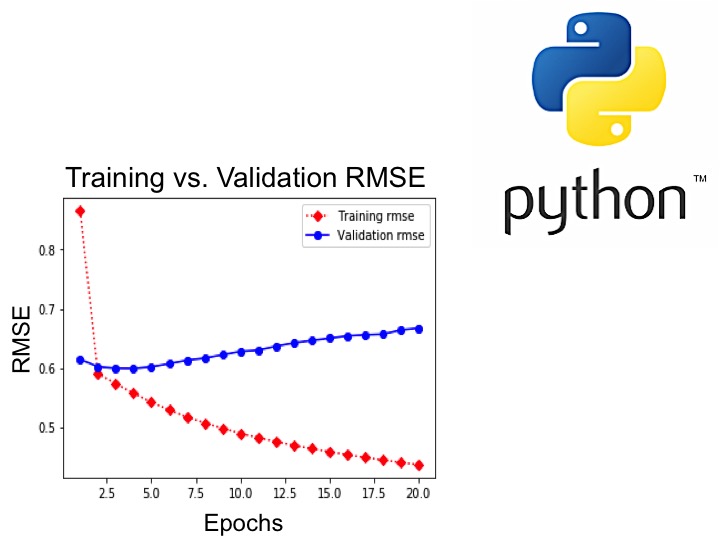
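To make the "start linear" step concrete, the sketch below compares ordinary least squares with Ridge, Lasso and Elastic Net using 5-fold cross-validation. It assumes scikit-learn, uses a synthetic regression problem, and the `alpha` values are arbitrary placeholders, not tuned settings from the portfolio.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import ElasticNet, Lasso, LinearRegression, Ridge
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic problem (an assumption): 20 features, only 5 of them informative.
X, y = make_regression(
    n_samples=300, n_features=20, n_informative=5, noise=10.0, random_state=0
)

models = {
    "ols": LinearRegression(),
    "ridge": Ridge(alpha=1.0),
    "lasso": Lasso(alpha=1.0),
    "elastic_net": ElasticNet(alpha=1.0, l1_ratio=0.5),
}

scores = {}
for name, model in models.items():
    # Scale inside the pipeline so each CV fold is standardized on its own training part.
    pipe = make_pipeline(StandardScaler(), model)
    scores[name] = cross_val_score(pipe, X, y, cv=5, scoring="r2").mean()
    print(f"{name}: mean R^2 = {scores[name]:.3f}")
```

The cross-validated scores of these simple models give a baseline that any more complex learner should beat before its extra cost is justified.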

3. Train Machine Learning Algorithms: train both kernel-based learners (such as Support Vector Machines (SVM)) and tree-based learners (such as RandomForest or Boosted trees), and develop an intuition about their performance for a particular problem. Perform cross-validation and try to understand when the models start overfitting. Develop an idea of the computational cost of building a particular classifier or regression model for a given set of features and hyperparameters.

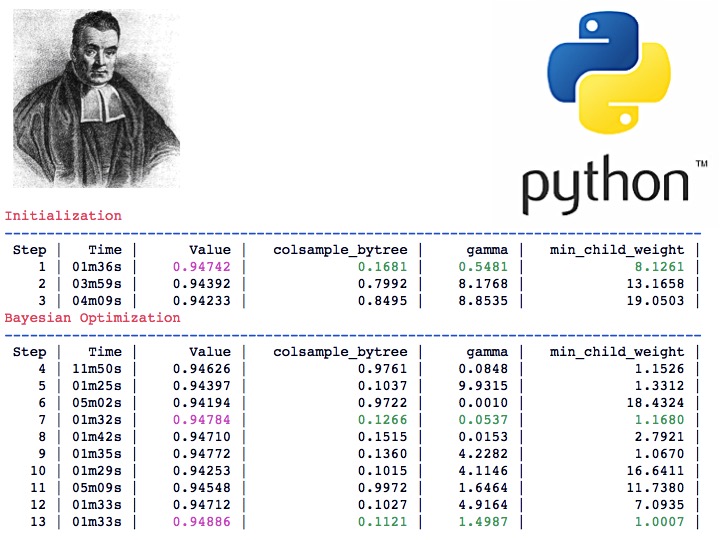
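One possible way to look at performance, overfitting and cost together is `cross_validate`, which reports training scores, validation scores and fit times per fold. The sketch below assumes scikit-learn and a synthetic classification problem; the three learners and their default settings are illustrative choices only.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.model_selection import cross_validate
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic problem (an assumption): 500 samples, 20 features, 5 informative.
X, y = make_classification(
    n_samples=500, n_features=20, n_informative=5, random_state=0
)

learners = {
    "svm_rbf": make_pipeline(StandardScaler(), SVC(kernel="rbf")),
    "random_forest": RandomForestClassifier(n_estimators=100, random_state=0),
    "boosted_trees": GradientBoostingClassifier(random_state=0),
}

results = {}
for name, learner in learners.items():
    cv = cross_validate(learner, X, y, cv=5, return_train_score=True)
    results[name] = {
        "train": cv["train_score"].mean(),     # accuracy on the training folds
        "test": cv["test_score"].mean(),       # accuracy on the held-out folds
        "fit_seconds": cv["fit_time"].mean(),  # average time to fit one fold
    }
    r = results[name]
    print(f"{name}: train={r['train']:.3f} test={r['test']:.3f} "
          f"fit={r['fit_seconds']:.3f}s")
```

A large gap between the training and held-out scores is a hint that a model is overfitting, and the fit times give a first estimate of how expensive a later hyperparameter search will be.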

4. Hyperparameter tuning (optimization): once there is an understanding of the performance of different models and the computational costs associated with them, I perform hyperparameter optimization. This requires some intuition about how hyperparameters might affect the performance of the model, how much time we can afford to wait, and what would be a sensible hyperparameter space to scan, among other considerations. Use Bayesian Optimization, GridSearch or RandomSearch with cross-validation. Whenever possible, build a pipeline and perform hyperparameter optimization using the entire pipeline.

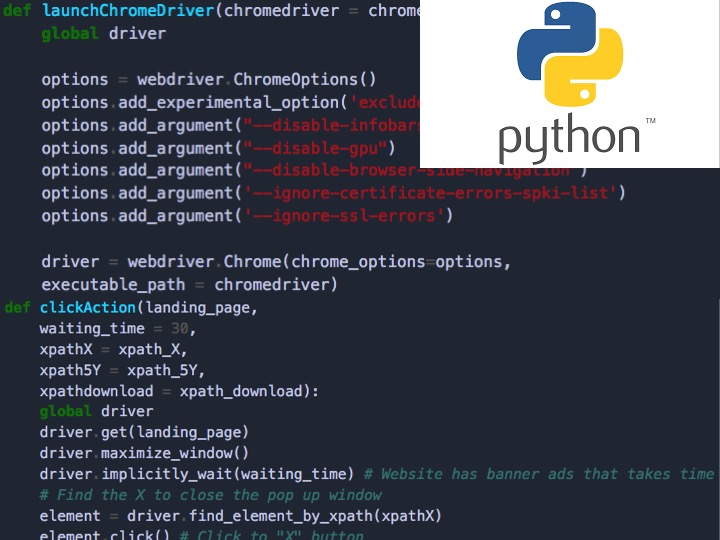
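Tuning the entire pipeline, as suggested in step 4, might look like the sketch below. It uses scikit-learn's `RandomizedSearchCV` (the random search option) with SciPy's `loguniform` distribution. The pipeline steps, the search ranges and `n_iter=20` are assumptions chosen to keep the example quick, not recommended settings.

```python
from scipy.stats import loguniform
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.model_selection import RandomizedSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic problem (an assumption): 400 samples, 20 features, 5 informative.
X, y = make_classification(
    n_samples=400, n_features=20, n_informative=5, random_state=0
)

pipe = Pipeline([
    ("scale", StandardScaler()),
    ("reduce", PCA()),
    ("clf", SVC()),
])

# Parameters are addressed as <step name>__<parameter name>.
param_distributions = {
    "reduce__n_components": [5, 10, 15],
    "clf__C": loguniform(1e-2, 1e2),
    "clf__gamma": loguniform(1e-4, 1e0),
}

search = RandomizedSearchCV(
    pipe, param_distributions, n_iter=20, cv=5, random_state=0
)
search.fit(X, y)

print(search.best_params_)
print(f"best CV accuracy: {search.best_score_:.3f}")
```

Because the scaler and PCA live inside the pipeline, they are refit on each training fold, so the preprocessing choices are tuned together with the classifier and no information leaks from the validation folds.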

Implementation: There are many programming languages you can use, and many libraries are available to install for a given language. Python and R are probably the most popular, and with them you can handle almost all data analysis tasks today. Both are open source and backed by large communities.

I believe using both R and Python makes a powerful combination, though the choice also depends on the preferences of your team.

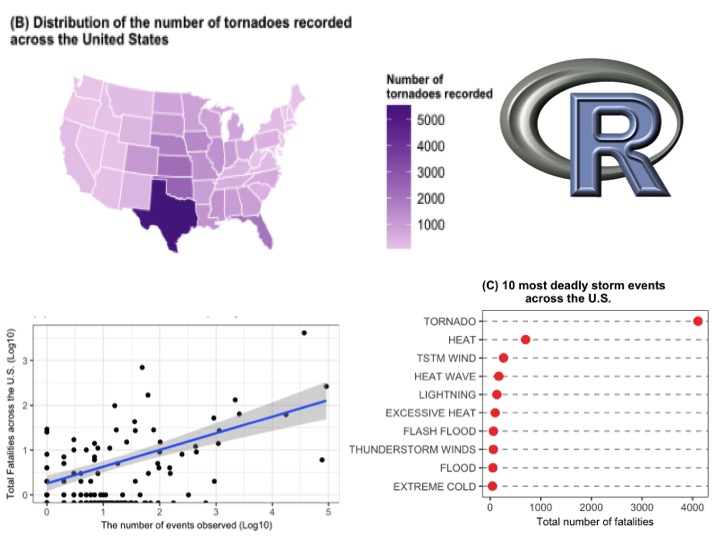

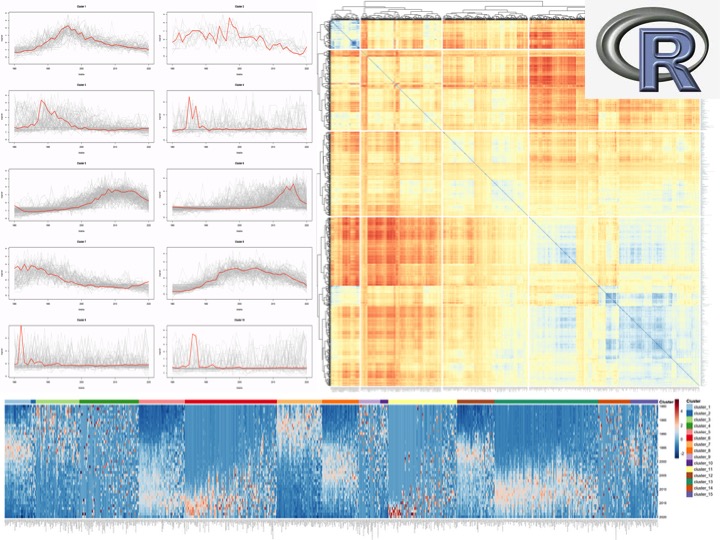

I use R to explore data sets, which has an unmatched data visualization

capacity thanks to user friendly libraries like ggplot2. Data cleaning process is a blast thanks to libraries like

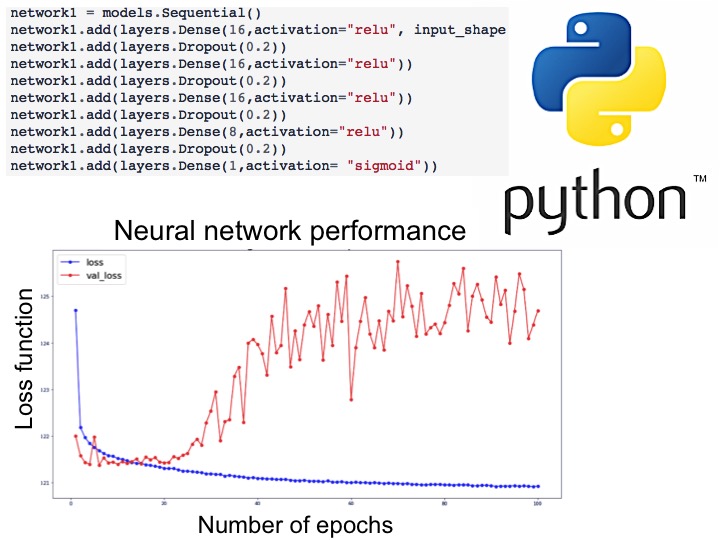

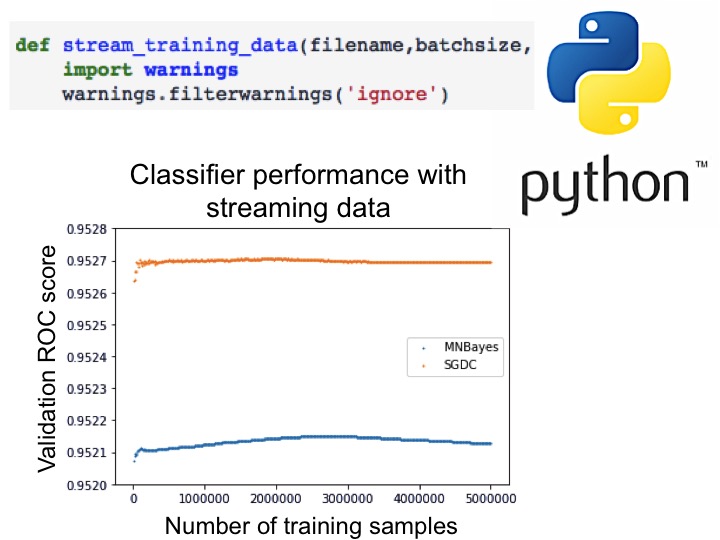

dplyr. Machine learning tasks are greatly simplified using the caret package. I use Python in parallel to R, to perform NLP, and to

train advanced machine learning algorithms. Sklearn API and its integration with many built in Python libraries, ease of configuration for distributed computation,

makes Python my choice when it comes to building machine learning pipelines and hyperparameter optimization.

About Ozan

My daily joy is to ask questions and make data speak. I equally enjoy all steps of the data analysis cycle, from data wrangling to imputation, from feature engineering to dimension reduction, from fitting simple linear models to training complex machine learning algorithms and hyperparameter optimization, and the art of data communication. I am dedicated to life-long learning, and I celebrate the diversity in the teams I work with. I am fascinated by leveraging data to seek answers to questions that would make an impact on life as a whole.

Contact